I recently collaborated on writing a new end-to-end (E2E) test suite for ArcGIS Hub.

Let me define what I’m talking about. E2E tests usually take the form of automated GUI tests that run against a live system.

In the web context, an end-to-end test runner often remotely automates a browser. That means that classic unit testing tools — mocking, stubbing, access to internal state, etc. — are generally unavailable.¹

This makes end-to-end tests very high-fidelity but also introduces potential pitfalls. Here’s my advice.

Don’t Write Them Without Defining the Reason

Why are you writing these tests? In this paper², researchers identified two main reasons developers write these and other GUI tests:

- Automated acceptance testing (an encapsulation of customer expectations).

- Automated regression testing (preventing regression errors).

So which one is it for you? Or is it another reason altogether?

Articulate your goal and make sure your team is on the same page about this. This ensures that everyone understands exactly what the purpose and value of these tests will be.

Don’t Duplicate Coverage

Let’s say you’ve created a new UI component. If you can cover the functionality in a unit test (or whatever your particular framework calls it), do it there!

Unit tests are generally easier to maintain, less flaky, and less expensive to run in your CI pipeline.

E2E tests should be covering the areas only they can cover. Usually, these are the big-picture user stories that span many components and views. We’re talking about big, high-value flows, like:

- Signing up.

- Logging in and out.

- Creating a new <whatever users create in your app> and sharing it.

- Updating a user’s profile information.

Don’t Use a Single-Layer Architecture

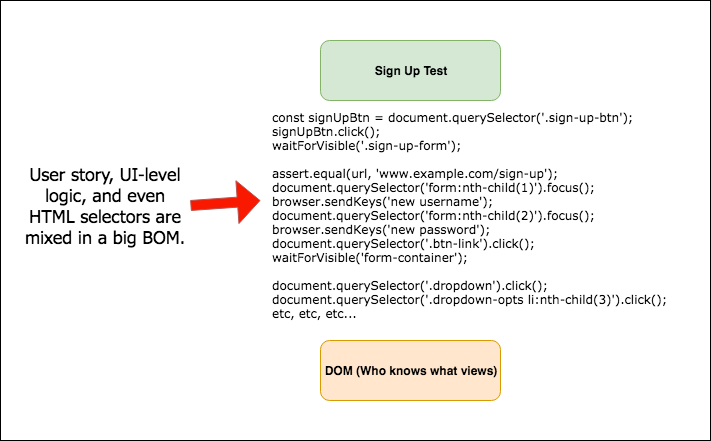

In a web context, a single-layer E2E test architecture would look like this (so this is what to avoid):

If you use a single-layer architecture for your E2E test suite, you are in for a world of pain.

Specifically, you (and others) will find:

- Tests difficult to understand and, hence, to debug. Mixing high-level and low-level logic will introduce way too much detail into the tests.

- Relatively unimportant UI changes will break lots of tests. This is because logic and HTML selectors will have been massively duplicated, creating multiple points of failure.

- Large swaths of your test suite will need to be updated when the UI changes. This goes along with the previous point.

- You won’t be able to reuse portions of test logic. This will create extra effort and headaches.

- My voice may pop into your head… laughing at your pain.

Instead, use a multi-layer architecture that allows the tests themselves to be understandable expressions of user stories. Hide area-specific logic and selectors in mediating layers.

Specifically, I recommend using the Page Object Model. See Martin Fowler’s article³ to learn more.
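To make the layering concrete, here is a minimal sketch of a page object. Everything in it is an assumption made for illustration: the `Driver` interface, the `SignInPage` class, the `/sign-in` path, and the `data-test` slugs are all hypothetical, and `FakeDriver` is an in-memory stand-in for a browser that exists only so the sketch runs on its own. The calls are synchronous for brevity; most real browser drivers are asynchronous, so these methods would return promises instead.

```typescript
// Hypothetical minimal driver interface. A real suite would put its
// browser-automation tool behind something similar.
interface Driver {
  visit(path: string): void;
  type(selector: string, text: string): void;
  click(selector: string): void;
  textOf(selector: string): string;
}

// Page object: the only place that knows the sign-in page's selectors.
class SignInPage {
  private readonly driver: Driver;
  private readonly selectors = {
    username: '[data-test="sign-in-username"]',
    password: '[data-test="sign-in-password"]',
    submit: '[data-test="sign-in-submit"]',
    greeting: '[data-test="user-greeting"]',
  };

  constructor(driver: Driver) {
    this.driver = driver;
  }

  open(): void {
    this.driver.visit("/sign-in");
  }

  signInAs(username: string, password: string): void {
    this.driver.type(this.selectors.username, username);
    this.driver.type(this.selectors.password, password);
    this.driver.click(this.selectors.submit);
  }

  greeting(): string {
    return this.driver.textOf(this.selectors.greeting);
  }
}

// In-memory stand-in for a browser, present only so the sketch is runnable.
class FakeDriver implements Driver {
  currentPath = "";
  private fields: { [selector: string]: string } = {};
  private greetingText = "";

  visit(path: string): void {
    this.currentPath = path;
    this.fields = {};
    this.greetingText = "";
  }

  type(selector: string, text: string): void {
    this.fields[selector] = text;
  }

  click(selector: string): void {
    // Pretend the app greets whoever was typed into the username field.
    if (selector === '[data-test="sign-in-submit"]') {
      const name = this.fields['[data-test="sign-in-username"]'] || "";
      this.greetingText = "Welcome, " + name;
    }
  }

  textOf(selector: string): string {
    return selector === '[data-test="user-greeting"]' ? this.greetingText : "";
  }
}

// The test layer reads as a user story and contains no selectors.
function userCanSignIn(driver: Driver): string {
  const page = new SignInPage(driver);
  page.open();
  page.signInAs("casey", "correct horse");
  return page.greeting();
}
```

If the sign-in form's markup changes, only `SignInPage` needs to be touched; every test that signs in keeps calling `signInAs` unchanged.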

Don’t Use Breakable Selectors

This section is specifically geared toward my specialty, web development, but the principle of using robust methods to get hold of the right UI element is always applicable.

In the web context, there are several ways to find a particular element and they aren’t all equally impervious to changes in the UI.

Let me rate them in order of breakability, from most to least breakable.

- The CSS class selector (e.g. `.sign-in-btn`). Classes are liable to change, so these are liable to break.

- The `:nth-child()` selector. The order of elements at the same hierarchical level seems prone to change. In addition, these selectors are often non-descriptive, making error messages from your test runner harder to understand.

- The scoping selector (e.g. `.img-view .container .img-wrapper img`). This one seems less likely to change than the previous two because element hierarchy is more stable. However, it is also usually mixed with class selectors.

- The ID selector (e.g. `#thumbnail`). It seems like IDs almost never change, but they can. There may also be other reasons to avoid adding them to elements, such as if the UI framework you are using inserts and relies on them internally.

- The `data-test="some-unique-slug"` attribute. This is an attribute added to the target element, solely to give the test a handle. It can be as descriptive as you want and, as long as the value is unique, the test will be able to find it. It is totally impervious to changes in layout, ID, styles, and even element type. Also, most developers/UX people know not to mess with it. This one is the silver bullet.

I’m not going to preach hellfire against CSS selectors. It is up to you which selectors you choose to trust.

However, if it were up to me, I would choose data-test attributes every time. When my E2E tests fail, I don’t want it to be because of a style change.
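As a small illustration of the data-test approach, the sketch below builds the matching CSS attribute selector from a slug. The helper name `byTestId` and the slugs are hypothetical; the only thing assumed about your test runner is that it accepts ordinary CSS selector strings.

```typescript
// Builds a CSS attribute selector for a data-test handle.
function byTestId(slug: string): string {
  // Escape backslashes and double quotes so the slug cannot break out of
  // the quoted attribute value.
  const escaped = slug.replace(/\\/g, "\\\\").replace(/"/g, '\\"');
  return '[data-test="' + escaped + '"]';
}

// Hypothetical handles for a sign-in view, collected in one place.
const signInHandles = {
  submit: byTestId("sign-in-submit"),
  thumbnail: byTestId("item-thumbnail"),
};
```

With markup such as `<button class="btn btn-primary" data-test="sign-in-submit">`, the class names can be restyled or the element swapped for a link, and `signInHandles.submit` still finds it.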

Don’t Expect Your Suite to Be Maintenance-Free

When you create an E2E test suite, it becomes a reflection of your app’s user interface.

How often will that UI change in the next year? That’s right. All the time. So, the reflection has to evolve with it. You and your team need to accept and embrace this fact of end-to-end testing.

Otherwise, you will likely find yourselves in an adversarial relationship with your test suite. The tests will become flaky, annoying, and ignored. If you allow that to happen, you will have wasted your effort!

Don’t Ignore Flaky Tests

In general, developers are prone to blame tests first and their changes second.

I have noticed that this is even more pronounced in the context of end-to-end testing. And, because E2E tests rely on live, external systems, it takes extra effort to make sure they don’t randomly fail because of non-deterministic factors such as network conditions, current load on external services, etc.

The effort is worth it. If the tests are too flaky, developers won’t trust them. They just won’t. The tests will become annoying instead of helpful.

Each flaky test is different. The test may need to wait longer for a view to load, or it may need something else. Regardless, my advice is to either shore it up or remove it. Don’t leave it in there to tarnish the suite’s reputation.

Automatically reporting basic telemetry, such as run-length and failure/success, to a dashboard interface can aid in identifying problem tests. We use Elastic’s Kibana.
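Whatever dashboard ends up displaying the data, the summarizing step can be quite small. The sketch below is one possible shape, not a description of our setup: the `TestRun` record, the function names, and the "sometimes passes, sometimes fails" rule for flagging candidates are all assumptions made for illustration.

```typescript
// One record per test execution (hypothetical shape).
interface TestRun {
  testName: string;
  passed: boolean;
  durationMs: number;
}

interface TestSummary {
  testName: string;
  runs: number;
  failureRate: number; // between 0 and 1
  meanDurationMs: number;
}

// Aggregates raw run records into one summary per test.
function summarizeRuns(runs: TestRun[]): TestSummary[] {
  const totals: {
    [testName: string]: { runs: number; failures: number; totalMs: number };
  } = {};
  for (const run of runs) {
    const entry = totals[run.testName] || { runs: 0, failures: 0, totalMs: 0 };
    entry.runs += 1;
    if (!run.passed) {
      entry.failures += 1;
    }
    entry.totalMs += run.durationMs;
    totals[run.testName] = entry;
  }
  return Object.keys(totals).map((testName) => {
    const t = totals[testName];
    return {
      testName: testName,
      runs: t.runs,
      failureRate: t.failures / t.runs,
      meanDurationMs: t.totalMs / t.runs,
    };
  });
}

// Flags tests that both passed and failed across at least minRuns runs.
// These are only candidates: a real regression that was later fixed
// produces the same pattern, so a human still has to look.
function flakyCandidates(summaries: TestSummary[], minRuns: number): string[] {
  return summaries
    .filter((s) => s.runs >= minRuns && s.failureRate > 0 && s.failureRate < 1)
    .map((s) => s.testName);
}
```

Restricting the input to runs against the same commit would make the flag more trustworthy, since mixed results on unchanged code cannot be explained by a code change.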

Conclusion

End-to-end tests can be powerful both as an articulation of user expectations and as a guard against regressions. I think they absolutely deserve a place in a developer’s toolkit.

Avoiding these pitfalls will help you to apply this useful tool with as much effectiveness as possible.

(Originally published on Medium.)

References

[1] Some frameworks such as Cypress do provide XHR request and DOM mocking. This can be very useful, but keep in mind that, while these features may reduce flakiness, whenever you mock an external system you are decreasing the fidelity of your test.

[2] Hellmann, T. D., Moazzen, E., Sharma, A., Akbar, Z., Sillito, J., & Maurer, F. (2014). An Exploratory Study of Automated GUI Testing: Goals, Issues, and Best Practices.

[3] Fowler, M. (2013). PageObject. Retrieved from https://martinfowler.com/bliki/PageObject.html.